Accessibility 201: A Dive Through The Windows Stack

A turtle swimming up to the shoreline after a dive in the ocean.

(Credit: memedboulala - CC BY-SA 4.0)

[Disclaimer: at the time of writing, I am a Software Engineer at Microsoft Corp. in Vancouver, BC.

However, I am never speaking on behalf of my employer, therefore opinions and thoughts are solely mine]

About a month ago, I wrote about the Big Picture for accessibility in software. If you didn't get to read through it, the main takeaways are:

- Accessibility in software is all about adapting the experience to different users' needs,

- Junior software developers are often ill-equipped to deal with accessibility issues because there is little formal training,

- It is a significant design and technical challenge to make an app accessible, and empathy is essential in understanding why accessibility is so important

So we know we want to make our apps accessible, but just how do we do that? What kind of data structures and functions do we need in order to have a screen reader actually read the screen? How can we follow what's in focus? And, more importantly, how can we do all of this in the most efficient way for developers and consumers alike?

As previously mentioned, accessibility is a design and technical challenge. In this article, I'll do a deep dive on the technical side of things and provide more information about the systems within the OS that provide a foundation for accessible software. If you're not technically inclined, you might want to stop reading now. From the disclaimer, you can guess that most of my experience in this regard comes from Windows and the Microsoft products that I've had the chance to contribute to and develop. We'll be focusing on Windows APIs, but the high-level technical concepts are likely to extend to major Linux desktop environments. Furthermore, accessibility on the web works on top of this API foundation, but there are a couple more interesting tidbits to mention there (which I hope to write down in a later blog post).

For now, let's dive into Microsoft Windows and its Accessible Interfaces.

Communication is key

To make an application accessible, we need to allow it to interact with other pieces of software that can adapt the experience to a user with an accessibility need. This can include capturing focus, raising alerts and notifications, or just passively exposing descriptive information about the UI. Windows Narrator is one of the key pieces of software within Windows that consumes those alerts, notifications and information from any application (including the operating system itself) and describes the current state of the screen to visually impaired users, so they can navigate any application and the entire OS with confidence. Narrator isn't the only software that can do this, though: NVDA is an open-source screen reader with much the same purpose.

Here's the problem though: how do we have completely different pieces of software, written in possibly different languages, running in separate processes communicate with each other? The solution is in Inter-process communication (IPC), a mechanism the operating system provides in a couple of different constructs and protocols. While these systems have been around in various forms, there are accessibility-specific APIs for Windows that are consolidated in Windows 10 as Microsoft UI Automation.

COM, IAccessible and Microsoft UI Automation

interface IUnknown {

virtual HRESULT QueryInterface (REFIID riid, void **ppvObject) = 0;

virtual ULONG AddRef () = 0;

virtual ULONG Release () = 0;

};

C++ snippet of COM's IUnknown Interface.

Accessibility, and other major OS features, in Windows are built on top of an IPC framework known as the Component Object Model (COM). It enables IPC by providing a stable Application Binary Interface (ABI) through which separate programs can share objects across binary (.exe, .dll) boundaries. COM is worth mentioning (at least briefly) because it enabled programs to do interop with the Operating System and between each other without needing to care about C++ compiler versions (this was very important in the early 90s!).
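To make the IUnknown contract a little more concrete, here is a minimal, portable sketch of the two ideas it encodes: asking an object at runtime whether it supports an interface, and sharing ownership through reference counting. Everything here is a hypothetical stand-in and not the Windows SDK: `IUnknownLike`, `INamed`, `Button`, `Result` and `name_of` are made up for illustration, and interfaces are identified by plain strings where real COM uses 128-bit GUIDs (`REFIID`) and `HRESULT` return codes.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical stand-ins for HRESULT values (assumption, not the real codes).
using Result = long;
constexpr Result kOk = 0;
constexpr Result kNoInterface = 1;

// Simplified model of IUnknown: interface discovery plus reference counting.
struct IUnknownLike {
    virtual Result QueryInterface(const char* iid, void** out) = 0;
    virtual unsigned long AddRef() = 0;
    virtual unsigned long Release() = 0;
protected:
    ~IUnknownLike() = default;  // objects are destroyed only through Release()
};

// A made-up interface an object may or may not support.
struct INamed : IUnknownLike {
    virtual const char* Name() const = 0;
protected:
    ~INamed() = default;
};

class Button final : public INamed {
public:
    // The creator receives the object with one reference already held.
    static Button* Create() { return new Button(); }

    Result QueryInterface(const char* iid, void** out) override {
        if (std::strcmp(iid, "IUnknownLike") == 0) {
            *out = static_cast<IUnknownLike*>(this);
        } else if (std::strcmp(iid, "INamed") == 0) {
            *out = static_cast<INamed*>(this);
        } else {
            *out = nullptr;
            return kNoInterface;
        }
        AddRef();  // every pointer handed out counts as a new reference
        return kOk;
    }

    unsigned long AddRef() override { return ++refs_; }

    unsigned long Release() override {
        assert(refs_ > 0);
        const unsigned long remaining = --refs_;
        if (remaining == 0) delete this;  // last owner gone: free the object
        return remaining;
    }

    const char* Name() const override { return "OK button"; }

private:
    Button() = default;
    ~Button() = default;
    unsigned long refs_ = 1;
};

// Client side: obtain INamed through QueryInterface, use it, then release it.
// Returns an empty string when the object does not support INamed.
std::string name_of(IUnknownLike* object) {
    void* raw = nullptr;
    if (object->QueryInterface("INamed", &raw) != kOk) return "";
    INamed* named = static_cast<INamed*>(raw);
    std::string result = named->Name();
    named->Release();  // balance the AddRef() done by QueryInterface
    return result;
}
```

The client never needs to know the concrete `Button` type or how it was compiled. It only relies on the layout of the interface and on the rule that every successful `QueryInterface` or `AddRef` is matched by a `Release`, which is roughly what lets COM objects cross binary boundaries.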

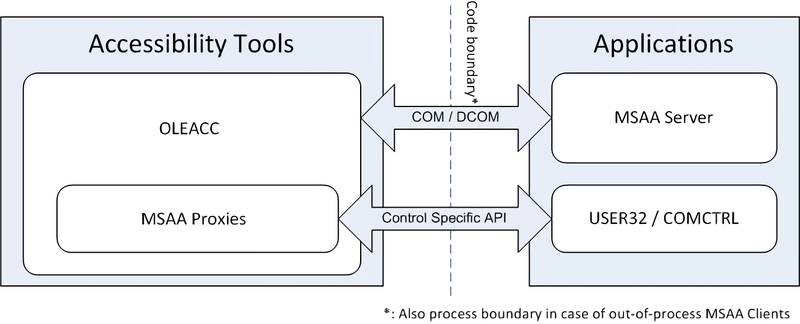

MSAA Architecture diagram showing the code boundary and COM as the bidirectional IPC method.

(Image is Public Domain)

The concepts of COM and its quintessential interface (IUnknown) are the foundation of major Windows components, including Microsoft Active Accessibility (MSAA). Publicly introduced in 1997 for Windows 95, MSAA is a COM API for exposing and controlling the UI, aimed at the Assistive Technologies (AT) of the day. It presents a client/server architecture in which user applications act as servers and create objects specifically to describe their internal Window Handle (HWND) elements as they are shown to the user. MSAA clients (like screen readers) can then query basic metadata about the window and interact with it programmatically.
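The client/server split can be sketched without any Windows headers. In the simplified model below, the application (the "server") builds a tree of objects describing its UI, and a screen-reader-like "client" walks that tree using only a handful of queries. `AccessibleNode`, `describe` and `describe_tree` are hypothetical names for illustration; the query methods are loosely modelled on `get_accName`, `get_accRole`, `get_accChildCount` and `get_accChild`, but the real interface works across processes with `VARIANT` and `BSTR` arguments, which this sketch leaves out entirely.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Simplified, in-process stand-in for an accessible object (assumption:
// names and roles are plain strings rather than BSTRs and role constants).
class AccessibleNode {
public:
    AccessibleNode(std::string name, std::string role)
        : name_(std::move(name)), role_(std::move(role)) {}

    // Server side: the application describes one more child element.
    AccessibleNode& add_child(std::string name, std::string role) {
        children_.push_back(
            std::make_unique<AccessibleNode>(std::move(name), std::move(role)));
        return *children_.back();
    }

    // Client-facing queries.
    const std::string& name() const { return name_; }
    const std::string& role() const { return role_; }
    std::size_t child_count() const { return children_.size(); }
    const AccessibleNode& child(std::size_t index) const {
        assert(index < children_.size());
        return *children_[index];
    }

private:
    std::string name_;
    std::string role_;
    std::vector<std::unique_ptr<AccessibleNode>> children_;
};

// Client side: depth-first walk that records one "role: name" line per
// element, indented by two spaces per tree level.
void describe(const AccessibleNode& node, std::size_t depth,
              std::vector<std::string>& lines) {
    lines.push_back(std::string(depth * 2, ' ') + node.role() + ": " + node.name());
    for (std::size_t i = 0; i < node.child_count(); ++i) {
        describe(node.child(i), depth + 1, lines);
    }
}

std::vector<std::string> describe_tree(const AccessibleNode& root) {
    std::vector<std::string> lines;
    describe(root, 0, lines);
    return lines;
}
```

A window named "Settings" with a "Save" button and an "Options" list would come out as a small indented outline, which is close in spirit to what a screen reader linearizes for its user.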

DEFINE_GUID(IID_IAccessible, 0x618736e0, 0x3c3d, 0x11cf, 0x81,0x0c, 0x00,0xaa,0x00,0x38,0x9b,0x71);

#if defined(__cplusplus) && !defined(CINTERFACE)

MIDL_INTERFACE("618736e0-3c3d-11cf-810c-00aa00389b71")

IAccessible : public IDispatch

{

virtual HRESULT STDMETHODCALLTYPE get_accParent(IDispatch **ppdispParent) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accChildCount(LONG *pcountChildren) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accChild(VARIANT varChildID, IDispatch **ppdispChild) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accName(VARIANT varID, BSTR *pszName) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accValue(VARIANT varID, BSTR *pszValue) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accDescription(VARIANT varID, BSTR *pszDescription) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accRole(VARIANT varID, VARIANT *pvarRole) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accState(VARIANT varID, VARIANT *pvarState) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accHelp(VARIANT varID, BSTR *pszHelp) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accHelpTopic(BSTR *pszHelpFile, VARIANT varID, LONG *pidTopic) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accKeyboardShortcut(VARIANT varID, BSTR *pszKeyboardShortcut) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accFocus(VARIANT *pvarID) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accSelection(VARIANT *pvarID) = 0;

virtual HRESULT STDMETHODCALLTYPE get_accDefaultAction(VARIANT varID, BSTR *pszDefaultAction) = 0;

virtual HRESULT STDMETHODCALLTYPE accSelect(LONG flagsSelect, VARIANT varID) = 0;

virtual HRESULT STDMETHODCALLTYPE accLocation(LONG *pxLeft, LONG *pyTop, LONG *pcxWidth, LONG *pcyHeight, VARIANT varID) = 0;

virtual HRESULT STDMETHODCALLTYPE accNavigate(LONG navDir, VARIANT varStart, VARIANT *pvarEnd) = 0;

virtual HRESULT STDMETHODCALLTYPE accHitTest(LONG xLeft, LONG yTop, VARIANT *pvarID) = 0;

virtual HRESULT STDMETHODCALLTYPE accDoDefaultAction(VARIANT varID) = 0;

virtual HRESULT STDMETHODCALLTYPE put_accName(VARIANT varID, BSTR pszName) = 0;

virtual HRESULT STDMETHODCALLTYPE put_accValue(VARIANT varID, BSTR pszValue) = 0;

};

#endif // defined(__cplusplus) && !defined(CINTERFACE)

C++ snippet of the IAccessible Interface.

With the help of Windows' Common Controls and the Microsoft Foundation Classes (MFC), developers had a default collection of IAccessible objects readily available for their interface. Building this into the UI framework they were (most likely) already using lessened the burden of crafting custom IAccessible-derived classes to describe that interface. Developers could still completely override these default implementations and describe the visual tree to the user in different ways.
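As a rough illustration of "framework default, developer override", consider the sketch below. It is not MFC or Common Controls code: `ButtonAccessible`, `CloseButtonAccessible` and `announce` are hypothetical names, and the assumption is simply that a framework derives a button's accessible name from its caption unless the developer says otherwise.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical framework-provided default: the accessible name is taken
// straight from the button's caption.
class ButtonAccessible {
public:
    explicit ButtonAccessible(std::string caption) : caption_(std::move(caption)) {
        assert(!caption_.empty());  // a button with no caption has no default name
    }
    virtual ~ButtonAccessible() = default;

    virtual std::string name() const { return caption_; }
    virtual std::string role() const { return "push button"; }

private:
    std::string caption_;
};

// Developer override: the caption "X" is meaningless when read aloud, so the
// application supplies a more descriptive name and keeps the default role.
class CloseButtonAccessible : public ButtonAccessible {
public:
    CloseButtonAccessible() : ButtonAccessible("X") {}
    std::string name() const override { return "Close dialog"; }
};

// What a screen-reader-like client might announce for a given button.
std::string announce(const ButtonAccessible& button) {
    return button.name() + ", " + button.role();
}
```

A plain "Save" button needs no extra work, while the icon-like "X" button is a typical case where the default is technically correct but unhelpful, and a small override makes the difference for the user.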

While user interfaces have become much more complex since Windows 95, this concept of accessibility built into UI frameworks carries over today with WinForms, WPF, UWP and many third-party frameworks. Furthermore, the increasing amount of metadata and user interactions brought along a new API for accessibility in Windows: Microsoft's UI Automation (UIA). It is worth mentioning that this new API has a more generic name because many of the same accessibility APIs can also be leveraged for out-of-process UI testing and process automation.

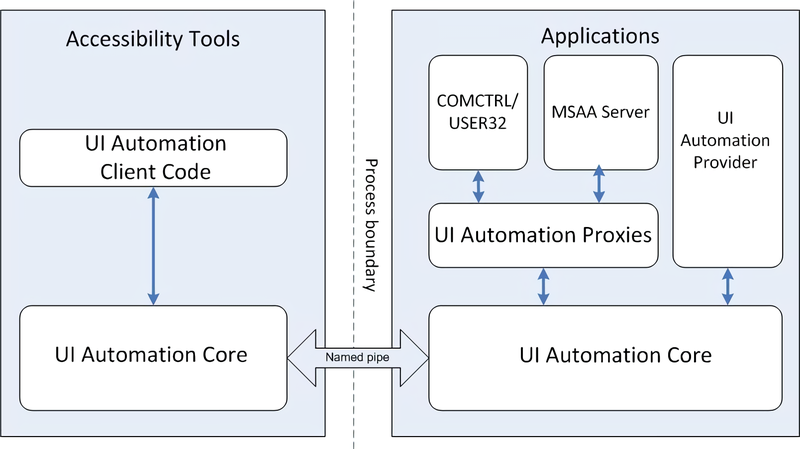

Microsoft UI Automation Architecture Diagram. Programs now communicate through pipes in the OS and the system provides a bridge to legacy accessibility interfaces.

(Image is Public Domain)

Tools of the trade

Microsoft's UI Automation API brings in a ton of information to describe your app, but how do we validate that it's providing the information we expect to users? At first, you might be tempted to just use any Windows accessibility tool (like Narrator) and check that it works as expected. While you'll definitely want to do a pass with Windows Narrator on your entire application, there are also developer tools to inspect how your app interacts with the Automation Framework.

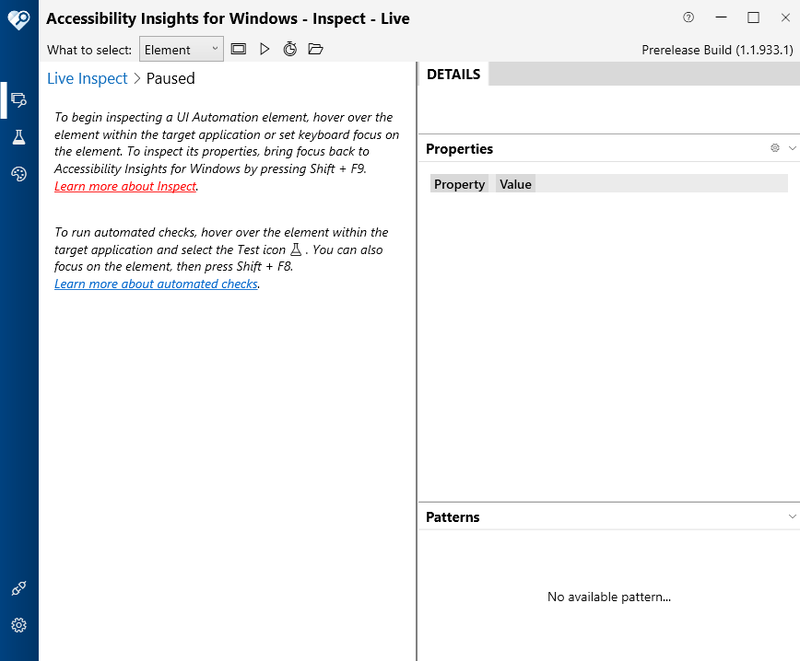

Accessibility Insights for Windows - Splash Screen

(Copyright - Microsoft Corporation)

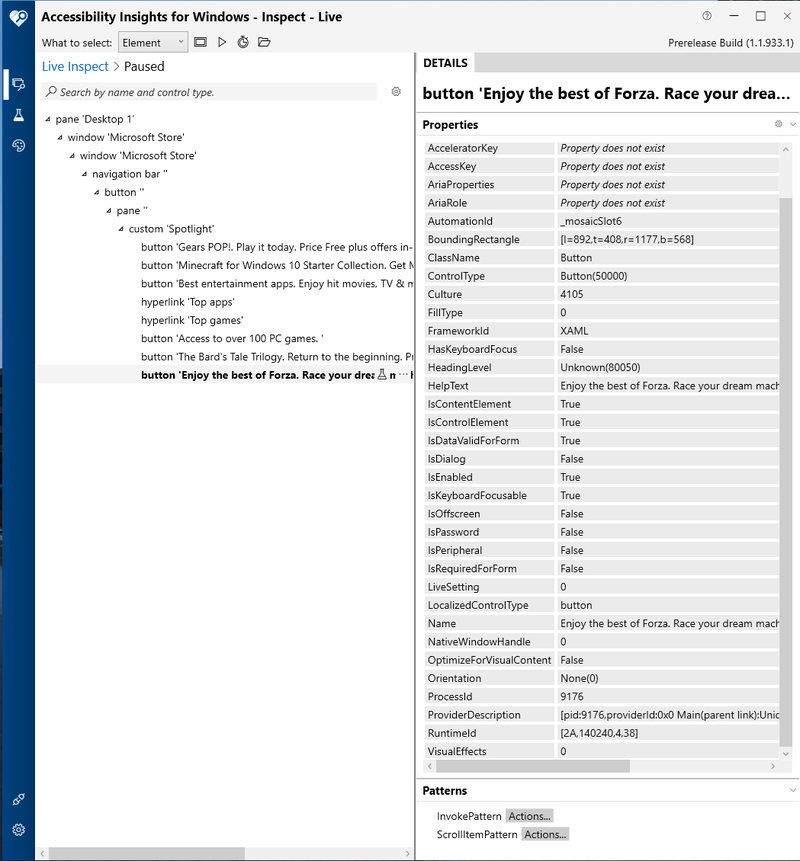

A good, friendly tool for checking apps is Accessibility Insights. There's a web version and a native desktop app, and both are free and open-source software. Because we're talking about OS APIs, the native version is most relevant to this discussion. With it, you can easily check what a particular Window or Widget is conveying to the Automation API, along with the Visual Tree it's exposing in the process.

Accessibility Insights Main Window. The application starts out in Live Inspect Mode by default.

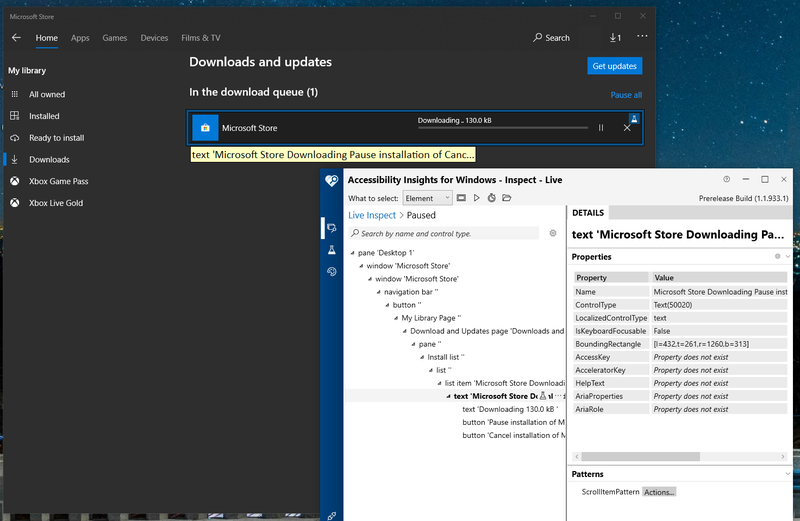

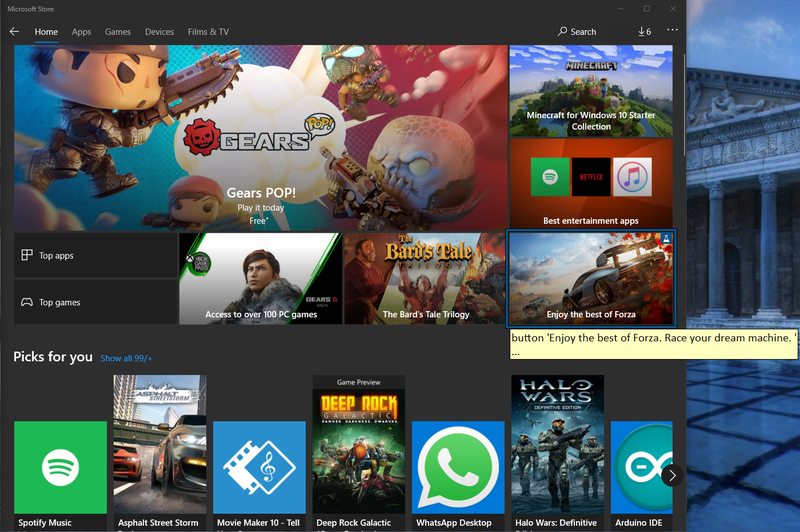

Let's try checking an actual UWP app under Accessibility Insights. The Microsoft Store is a pretty good example to run through.

Live Inspect of the Microsoft Store downloads and updates page. Here, Accessibility Insights shows the UI Tree of the application.

Accessibility Insights has a number of extremely useful modes and tools. As shown above, it defaults to the Live Inspect mode, where it tries to obtain information about the element that is currently targeted (usually the one under the user's cursor or the one with keyboard focus). In this mode, we're shown two columns: the live Visual Tree (navigable by keyboard) and the Details view. The Details view is itself split into two rows, with the first, larger one showing the element's properties and the second one showing its Automation Patterns. In my case, I've highlighted the Microsoft Store update row in that list view.

In the UI Automation world, Control Patterns describe ways in which an element can be programmatically manipulated. These patterns are usually based upon UI interaction, and a control can implement none, one or several of them at the same time. Accessibility Insights allows you to interact with the program through those patterns in a way that invokes the UI Automation API.
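The idea that an element exposes zero or more patterns, and that a client first asks which ones are available before acting, can be modelled in a few lines. This is only a conceptual sketch: `Element`, `PatternId`, `add_pattern`, `supports` and `perform` are hypothetical names, and each pattern is reduced to a single callback, whereas real UI Automation patterns (such as Invoke or Toggle) are full COM interfaces with their own methods and properties.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical pattern identifiers, named after two well-known UIA patterns.
enum class PatternId { Invoke, Toggle };

// Simplified element: a name plus an optional handler per supported pattern.
class Element {
public:
    explicit Element(std::string name) : name_(std::move(name)) {}

    // Provider side: declare that this element supports a pattern.
    void add_pattern(PatternId id, std::function<void()> handler) {
        assert(handler != nullptr);  // an empty handler would be unusable
        patterns_[id] = std::move(handler);
    }

    // Client side: check for support before trying to act on the element.
    bool supports(PatternId id) const { return patterns_.count(id) != 0; }

    // Runs the pattern if supported; returns false when it is not.
    bool perform(PatternId id) const {
        const auto it = patterns_.find(id);
        if (it == patterns_.end()) return false;
        it->second();
        return true;
    }

    const std::string& name() const { return name_; }

private:
    std::string name_;
    std::map<PatternId, std::function<void()>> patterns_;
};
```

A static text label would register no patterns at all, a check box would register Toggle, and a button would register Invoke. An automation client, whether a screen reader or a UI test, drives the application purely through whatever patterns each element reports.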

Above: The main page of the Microsoft Store showing a highlight and tooltip where the focus is currently

Below: Accessibility Insights inspecting the focused area and displaying a list of all explicitly assigned properties that are being retrieved through the Microsoft UI Automation API.

From inspecting the main page of the Microsoft Store, we see that the current focus is on the Forza page (I navigated there and left the cursor hovering over it). That button is part of a custom "Spotlight" control in the Visual Tree, along with several other buttons and links. These serve to linearly describe the UI to a visually impaired user. Notice also that the name of the button is a somewhat long string. That's because it's the exact text a screen reader would read upon obtaining the AutomationElement corresponding to that item. One important thing to note is the ProviderDescription property, which indicates what provider made this AutomationElement. From its value, we can likely infer that it was automagically generated from XAML:

ProviderDescription [pid:9176,providerId:0x0 Main(parent link):Unidentified Provider (unmanaged:Windows.UI.Xaml.dll)]

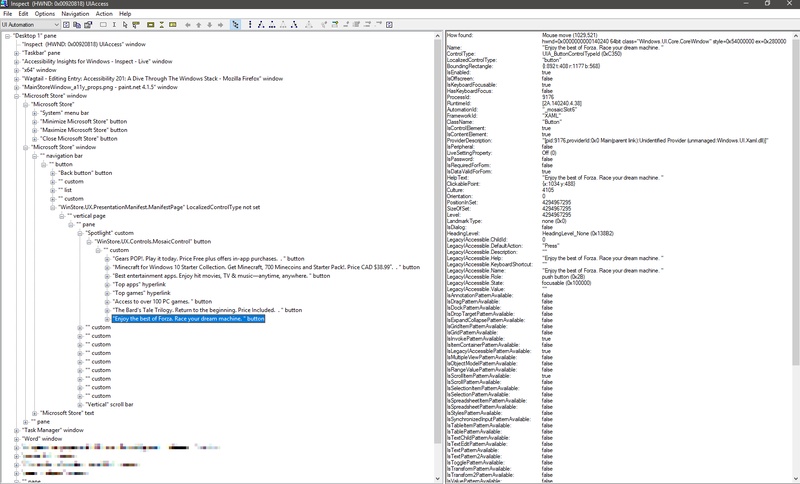

Accessibility Insights for Windows is an awesome tool, and it's a lot easier to try it out on your own than to have me attempt to describe it in its entirety here. However, it is only one of many useful tools at your disposal. One of the most powerful programs in Windows is the aptly named "inspect.exe". While it's now considered a legacy program, it can give you one of the most comprehensive looks into how the OS sees your application, with a visual tree whose root is the Desktop itself. It can be quite overwhelming if you're jumping in for the first time, but it does come with any Windows 10 SDK you've installed.

Inspect.exe conveys massive amounts of information and can be used to check keyboard focus stealing and Framework and OS level issues, and even to verify that applications work under both the UI Automation and MSAA client APIs.

Wrap-up and resources

If you are working on a tough accessibility-related issue in your software, or are just getting started with this, I'd highly recommend checking out Guy Barker's posts on MSDN. He has written a wealth of posts on addressing Windows Narrator issues and many other accessibility-related things. As for the APIs themselves, you'll find even more info over at the Windows Dev Center.

Hopefully, this article has given you enough of the technical bits that are involved in making software accessible and will help when facing bugs or issues related to this in the future. Though I focused on Windows specifically, the more general takeaway is that accessibility is built on top of IPC methods like COM. In fact, the AT-SPI implementation on Linux was initially made possible using CORBA (which is very similar to Distributed COM). But I'll leave Linux and Web interfaces for another day.